Hadoop-for-Windows-Eclipse-debug

Download Hadoop on Windows and install it (for example, to D:\dev\hadoop).

Environment variables

HADOOP_HOME=D:\dev\hadoop

HADOOP_USER_NAME=my

PATH=%PATH%;D:\dev\hadoop\bin
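Before going further it can help to confirm that the JVM started by Eclipse actually sees these variables (Eclipse has to be restarted after they change). The following is a minimal sketch, not part of Hadoop; the class name `HadoopEnvCheck` and its messages are made up for illustration.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

/** Hypothetical helper: reports problems with the Hadoop-related environment variables. */
class HadoopEnvCheck {

    /** Returns human-readable problems; an empty list means the checks passed. */
    static List<String> findProblems(Map<String, String> env) {
        List<String> problems = new ArrayList<>();
        String home = env.get("HADOOP_HOME");
        if (home == null || home.isEmpty()) {
            problems.add("HADOOP_HOME is not set");
            return problems; // nothing else can be checked without it
        }
        // The bin directory is the one that was added to PATH above.
        Path bin = Paths.get(home, "bin");
        if (!Files.isDirectory(bin)) {
            problems.add("missing directory: " + bin);
        }
        if (env.get("HADOOP_USER_NAME") == null) {
            problems.add("HADOOP_USER_NAME is not set");
        }
        return problems;
    }

    public static void main(String[] args) {
        List<String> problems = findProblems(System.getenv());
        if (problems.isEmpty()) {
            System.out.println("Hadoop environment looks consistent");
        } else {
            problems.forEach(System.out::println);
        }
    }
}
```

Running it as a plain Java application inside Eclipse checks the environment of the same kind of process that will later submit the job.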

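Hadoop configuration files

1. Replace the bin directory: download https://github.com/SweetInk/hadoop-common-2.7.1-bin, back up the existing hadoop/bin, and replace it with the downloaded folder.

2. Replace the etc directory: copy $HADOOP_HOME/etc from any node of the Hadoop cluster over the corresponding directory on Windows.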

eclipse 配置

将 hadoop-eclipse-plugin-2.7.3.jar 加入 eclipse\plugins

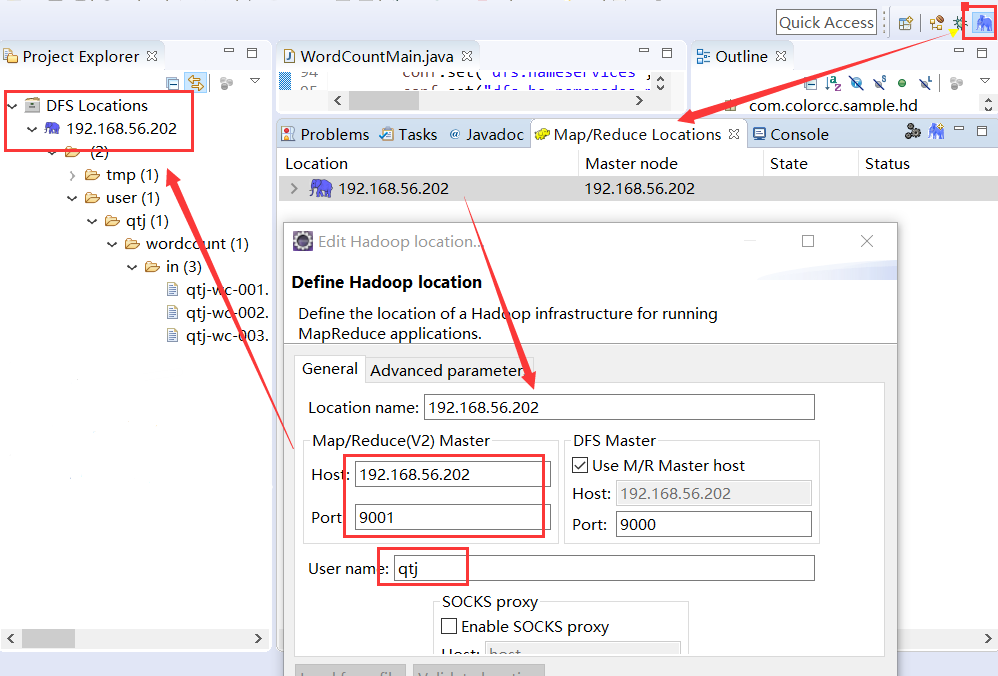

在 eclipse 里选择 map/reduce视图,按下图箭头步骤连接,成功后如左上角所示:

Warning about missing log4j configuration

log4j:WARN No appenders could be found for logger (org.apache.hadoop.metrics2.lib.MutableMetricsFactory).

log4j:WARN Please initialize the log4j system properly.

log4j:WARN See http://logging.apache.org/log4j/1.2/faq.html#noconfig for more info.

Fix: add a log4j.properties file to the project's classpath, following one of the many examples available online.
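As a rough illustration of what such a file can contain, the sketch below writes a console-only log4j 1.2 configuration. The helper class `Log4jConfigWriter`, the INFO level, the log pattern, and the src/main/resources target are assumptions chosen for this example; the property keys themselves are standard log4j 1.2 settings.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

/** Hypothetical helper that writes a minimal log4j 1.2 configuration file. */
class Log4jConfigWriter {

    /** Console-only configuration; level and pattern are illustrative choices. */
    static String minimalConfig() {
        return String.join("\n",
            "log4j.rootLogger=INFO, stdout",
            "log4j.appender.stdout=org.apache.log4j.ConsoleAppender",
            "log4j.appender.stdout.layout=org.apache.log4j.PatternLayout",
            "log4j.appender.stdout.layout.ConversionPattern=%d{yy/MM/dd HH:mm:ss} %p %c{2}: %m%n",
            "");
    }

    public static void main(String[] args) throws IOException {
        // Assumed Maven-style layout; adjust to wherever the project keeps its resources.
        Path target = Paths.get("src", "main", "resources", "log4j.properties");
        Files.createDirectories(target.getParent());
        // Properties files are read as ISO-8859-1; the content here is plain ASCII.
        Files.write(target, minimalConfig().getBytes(StandardCharsets.ISO_8859_1));
        System.out.println("wrote " + target.toAbsolutePath());
    }
}
```

Creating the four-line file by hand works just as well; the point is only that it must end up on the classpath of the run configuration.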

Connecting to ResourceManager at /0.0.0.0:8032

17/07/13 15:28:08 INFO client.RMProxy: Connecting to ResourceManager at /0.0.0.0:8032

17/07/13 15:28:11 INFO ipc.Client: Retrying connect to server: 0.0.0.0/0.0.0.0:8032. Already tried 0 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=10, sleepTime=1000 MILLISECONDS)

17/07/13 15:28:13 INFO ipc.Client: Retrying connect to server: 0.0.0.0/0.0.0.0:8032. Already tried 1 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=10, sleepTime=1000 MILLISECONDS)

17/07/13 15:28:15 INFO ipc.Client: Retrying connect to server: 0.0.0.0/0.0.0.0:8032. Already tried 2 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=10, sleepTime=1000 MILLISECONDS)

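Fix: put core-site.xml, hdfs-site.xml, yarn-site.xml, and mapred-site.xml on the classpath that Eclipse uses to run the job, for example under src/main/resources. Without them the client falls back to the default ResourceManager address 0.0.0.0:8032, which is what the log above shows.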
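One way to confirm that the files are really visible at run time is to look them up through the class loader, which is also how Hadoop's Configuration locates them. This is a small sketch using only the JDK; the class name `ClasspathConfigCheck` is hypothetical.

```java
import java.net.URL;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** Hypothetical helper: lists cluster config files that are not on the classpath. */
class ClasspathConfigCheck {

    static final List<String> REQUIRED = Arrays.asList(
        "core-site.xml", "hdfs-site.xml", "yarn-site.xml", "mapred-site.xml");

    /** Returns the names that the given class loader cannot resolve. */
    static List<String> missing(ClassLoader loader, List<String> names) {
        List<String> notFound = new ArrayList<>();
        for (String name : names) {
            URL url = loader.getResource(name);
            if (url == null) {
                notFound.add(name);
            } else {
                System.out.println(name + " -> " + url);
            }
        }
        return notFound;
    }

    public static void main(String[] args) {
        ClassLoader loader = Thread.currentThread().getContextClassLoader();
        List<String> notFound = missing(loader, REQUIRED);
        if (notFound.isEmpty()) {
            System.out.println("all cluster config files are on the classpath");
        } else {
            System.out.println("not on the classpath: " + notFound);
        }
    }
}
```

If a file is reported as missing even though it sits in src/main/resources, the folder is probably not configured as a source folder in the Eclipse build path.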

Exception message: /bin/bash: line 0: fg: no job control

17/07/13 15:31:40 INFO mapreduce.Job: Job job_1499925703157_0003 failed with state FAILED due to: Application application_1499925703157_0003 failed 2 times due to AM Container for appattempt_1499925703157_0003_000002 exited with exitCode: 1

Failing this attempt.Diagnostics: Exception from container-launch.

Container id: container_e01_1499925703157_0003_02_000001

Exit code: 1

Exception message: /bin/bash: line 0: fg: no job control

Stack trace: ExitCodeException exitCode=1: /bin/bash: line 0: fg: no job control

at org.apache.hadoop.util.Shell.runCommand(Shell.java:972)

at org.apache.hadoop.util.Shell.run(Shell.java:869)

at org.apache.hadoop.util.Shell$ShellCommandExecutor.execute(Shell.java:1170)

at org.apache.hadoop.yarn.server.nodemanager.DefaultContainerExecutor.launchContainer(DefaultContainerExecutor.java:236)

at org.apache.hadoop.yarn.server.nodemanager.containermanager.launcher.ContainerLaunch.call(ContainerLaunch.java:305)

at org.apache.hadoop.yarn.server.nodemanager.containermanager.launcher.ContainerLaunch.call(ContainerLaunch.java:84)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)

Container exited with a non-zero exit code 1

For more detailed output, check the application tracking page: http://qtj001:8088/cluster/app/application_1499925703157_0003 Then click on links to logs of each attempt.

. Failing the application.

17/07/13 15:31:40 INFO mapreduce.Job: Counters: 0

result: 1

Fix: add conf.set("mapreduce.app-submission.cross-platform", "true"); to the job code, before the job is submitted.
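A likely explanation for why this setting helps: a job submitted from Windows writes the container launch command with Windows-style variable references such as %JAVA_HOME%, and bash on the Linux node appears to treat a word starting with % as a job spec, hence "fg: no job control". With cross-platform submission enabled, the client emits neutral placeholders that the node expands in its own syntax. The sketch below is a deliberately simplified model of that idea, not Hadoop's actual code; the class and method names are invented.

```java
/** Simplified, hypothetical model of how a launch command is rendered; not Hadoop's code. */
class LaunchCommandSketch {

    /** Client side: how a reference to an environment variable is written into the command. */
    static String clientReference(String var, boolean clientIsWindows, boolean crossPlatform) {
        if (crossPlatform) {
            return "{{" + var + "}}";   // neutral placeholder, resolved later on the node
        }
        // Without the flag, the client's own shell syntax is baked in at submission time.
        return clientIsWindows ? "%" + var + "%" : "$" + var;
    }

    /** Node side: placeholders are expanded in the syntax of the machine running the container. */
    static String nodeExpand(String command, boolean nodeIsWindows) {
        return command.replace("{{", nodeIsWindows ? "%" : "$")
                      .replace("}}", nodeIsWindows ? "%" : "");
    }

    public static void main(String[] args) {
        // Windows client, flag off: the Linux node receives Windows syntax unchanged.
        String broken = nodeExpand(clientReference("JAVA_HOME", true, false) + "/bin/java", false);
        // Windows client, flag on: the Linux node expands the placeholder itself.
        String fixed = nodeExpand(clientReference("JAVA_HOME", true, true) + "/bin/java", false);
        System.out.println(broken); // prints %JAVA_HOME%/bin/java
        System.out.println(fixed);  // prints $JAVA_HOME/bin/java
    }
}
```

The same property can also be set in the mapred-site.xml that the client loads, which avoids hard-coding it in every driver.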

Clock skew: Unauthorized request to start container

15/02/26 16:41:04 INFO mapreduce.Job: map 0% reduce 0%

15/02/26 16:41:04 INFO mapreduce.Job: Job job_1424968835929_0001 failed with state FAILED due to: Application application_1424968835929_0001 failed 2 times due to Error launching appattempt_1424968835929_0001_000002. Got exception: org.apache.hadoop.yarn.exceptions.YarnException: Unauthorized request to start container.

This token is expired. current time is 1424969604829 found 1424969463686

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:57)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:526)

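The main cause is that the clocks of the cluster machines are not synchronized; running ntpdate xxx as root on each machine fixes it. My own setup is as follows:

1. Configure the NTP server machine:

Add three lines to /etc/ntp.conf on the server (192.168.56.201):

restrict 192.168.56.0 mask 255.255.255.0

# Note: comment out the other server lines

server 127.127.1.0

fudge 127.127.1.0 stratum 8

2. Restart ntpd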

systemctl restart ntpd

3. Synchronize the other machines against the server

ntpdate 192.168.56.201
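To judge how far apart the clocks were, the two numbers in the "This token is expired" message can be compared directly, since both are epoch milliseconds. The sketch below parses that message and returns how long past expiry the token was according to the node's clock; the class name `TokenSkew` is hypothetical.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Hypothetical helper: reads the two timestamps out of a "token is expired" message. */
class TokenSkew {

    private static final Pattern EXPIRED =
        Pattern.compile("current time is (\\d+) found (\\d+)");

    /** Returns (current time - token expiry) in milliseconds. */
    static long millisPastExpiry(String message) {
        Matcher m = EXPIRED.matcher(message);
        if (!m.find()) {
            throw new IllegalArgumentException("not a token-expired message: " + message);
        }
        long current = Long.parseLong(m.group(1)); // clock of the node that rejected the token
        long expiry = Long.parseLong(m.group(2));  // expiry time stamped into the token
        return current - expiry;
    }

    public static void main(String[] args) {
        String msg = "This token is expired. current time is 1424969604829 found 1424969463686";
        System.out.println(millisPastExpiry(msg) + " ms past expiry"); // prints 141143 ms past expiry
    }
}
```

For the log above the result is 141143 ms, so the node already considered the token about 141 seconds expired when it arrived. After the ntpdate step, a freshly submitted job should no longer hit this check.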
